This is a recap of Situational Awareness.

Important to know before the recap.

What is AGI and SuperIntelligence?

As I’ve shared in previous essays – binary definitions and terms like ‘Intelligence’ and ‘AGI’ are misleading. They are better thought of as existing on a spectrum – narrow at one end, broad or more general at the other.

Current AI is toward the narrow end – good at summarizing existing scientific theories; not so good at formulating new ideas or designing novel experiments to test them.

Most smart people agree that, at some point in the near-ish future, AI will move toward (and beyond) human-level general intelligence on that spectrum.

It’s not the ‘if’, but the ‘when’ that is most interesting.

Some predict that we are on track for this to happen very soon, others think we’re a long way off.

If the very soon camp is right, i.e., we move further along the spectrum to ‘vastly smarter than human, general AI within the decade’, things are going to get wild.

Situational Awareness

‘Situational Awareness’ is a paper presenting the case for how and why we’re likely to progress toward the superintelligence end of the spectrum faster than most are expecting.

The predictions in the paper are insane.

There are many bottlenecks and arguments for why progress to Artificial General Intelligence and beyond won’t happen within the decade.

But viewed through the lens of the enormous economic and geopolitical forces likely to soon be involved, the predictions seem, at the very least, plausible – and definitely worth considering.

This is my attempt at condensing and simplifying the paper.

I reworded and mashed some stuff together, so please read the original for full context.

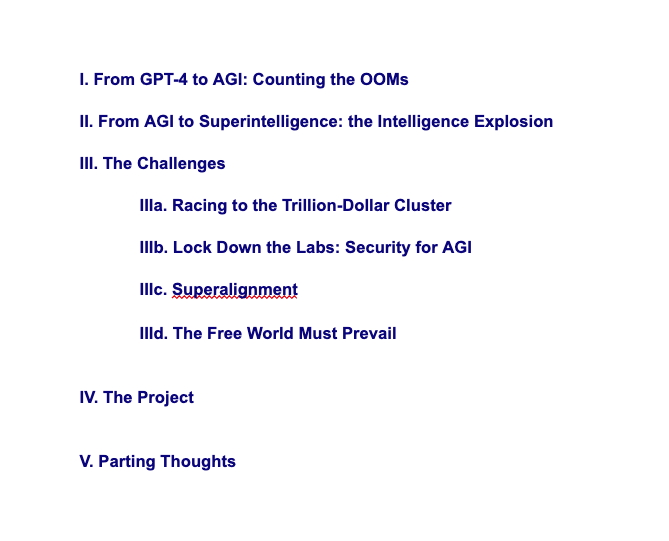

Four main parts to the paper:

- Chapter 1: From Current AI to AGI (OOMs of Progress)

- Chapter 2: From AGI to SuperIntelligence (The Intelligence Explosion)

- Chapter 3: Challenges Along the Way (Trillion Dollar Cluster, Security and Alignment)

- Chapter 4: The ‘Project’ (The AGI Arms Race)

Chapter 1: From Current AI to AGI (OOMs of Progress)

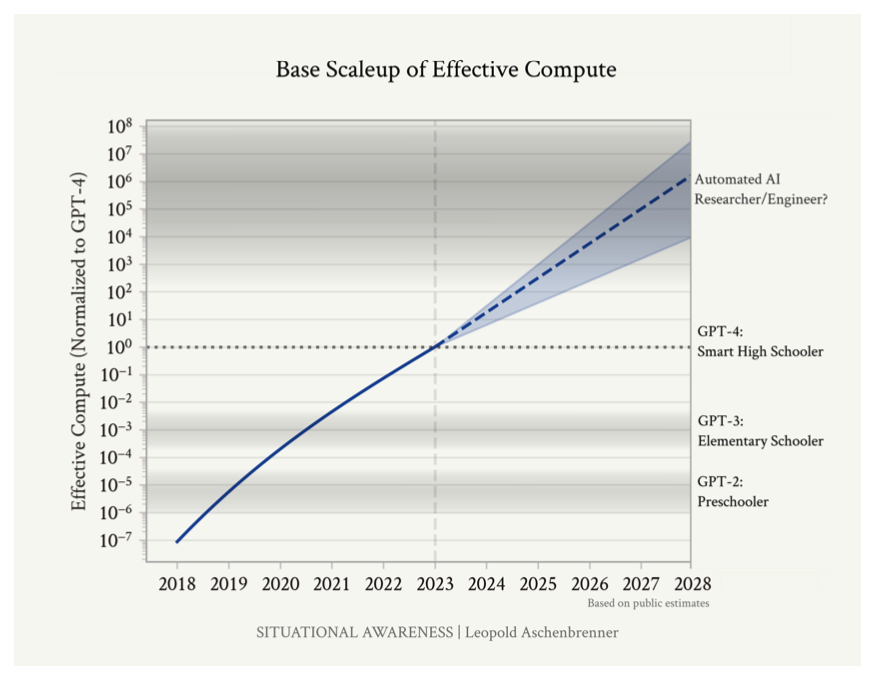

- Predicting AGI by 2027 (OOMs): The major claim of the first chapter (and the entire paper) is that, following the current rate of progress, we could realistically have Artificial General Intelligence by 2027. Progress toward AGI should be viewed in OOMs – order of magnitude (10x) improvements. [1]

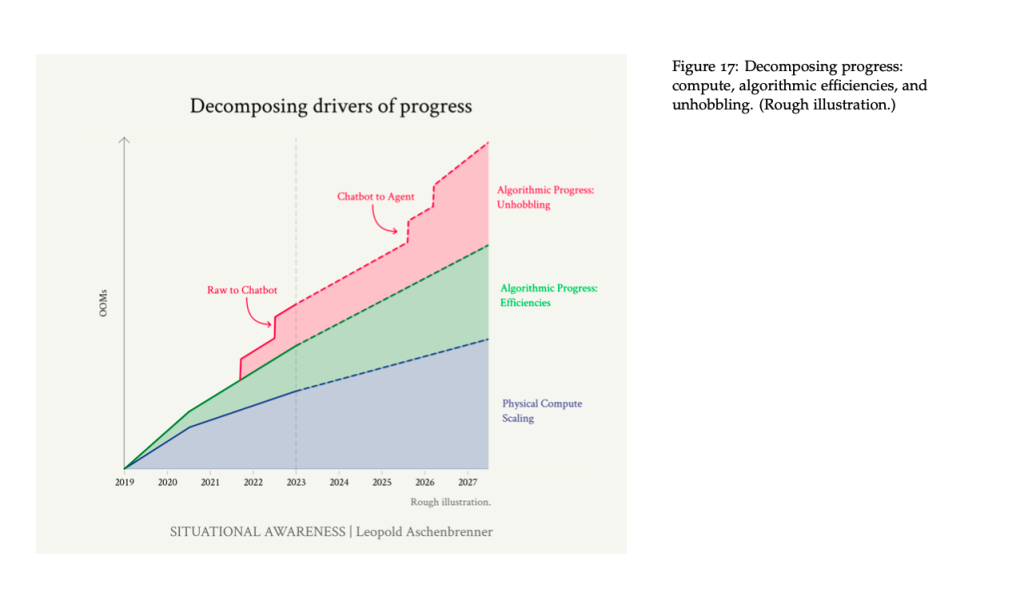

- Compute and Algorithmic Progress: You basically need two things to continue progress along the intelligence spectrum: (1) Increased Compute – to train and run the models, and (2) Algorithmic Improvements – improvements in the way AI learns and performs. Over the past decade, we’ve had roughly an OOM of increase in combined compute and algorithmic improvements every year – if this continues, we’ll almost certainly move along that intelligence spectrum rapidly. [2]

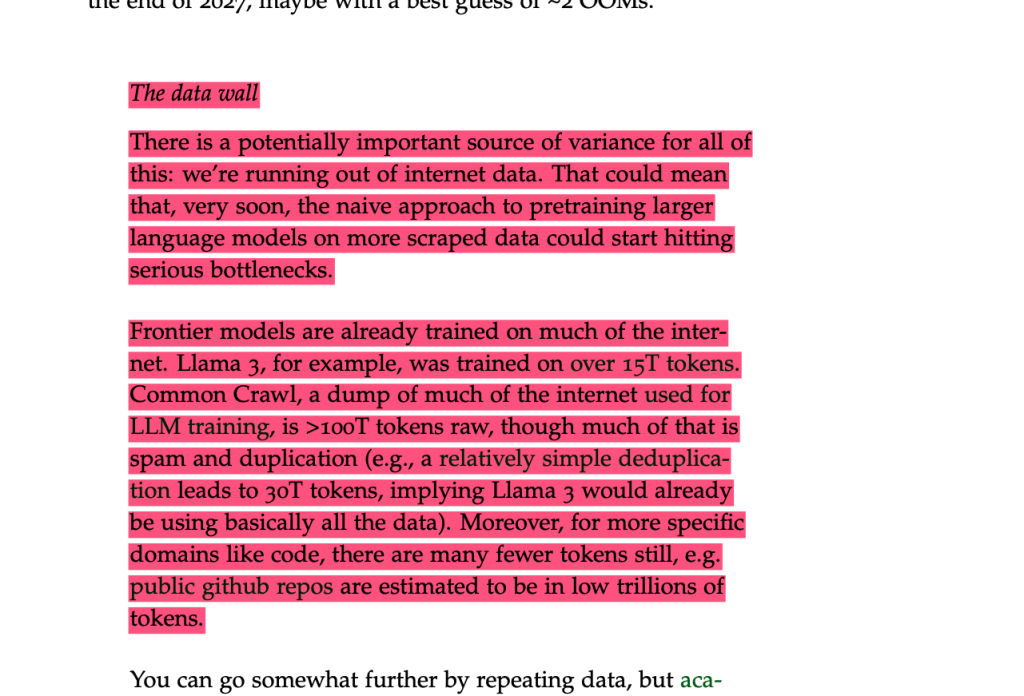

- Uncertainties, bottlenecks and the Data Wall: The author hedges everything by emphasizing ‘steep error bars in both directions’ – meaning it’s possible we move way faster, or way slower, along the spectrum due to a number of variables. Take the data wall, for example – models are currently trained on vast amounts of internet data, and we are basically running out of internet to train the models on. But on the other side of this, very smart people and very well resourced companies are working on novel ways to get around it – like the creation of higher quality synthetic data. [3]

- ‘Unhobblings’ – AI Cheat Codes: There are also ‘Unhobblings’, which are basically cheat codes that unlock ‘latent’ abilities in the model and require no extra compute or cost – it appears there are still a bunch of easy unlocks like this, which could not only keep us on track, but turbocharge algorithmic improvements. [4]

- Increasing Divergence from leading AI Labs: There are increasing incentives for leading labs to *not* share information with the outside world. This is only going to intensify, which will likely create a divergence between how leading companies approach creating AGI, and how the rest of us muggles make sense of what is happening behind the curtains. [5]

- Say Goodbye to Your Job: Most people envision a more advanced version of the ChatGPT chatbot. But what we’ll likely get is a more advanced remote coworker, who will basically start sending many white collar workers on permanent vacation. [6]
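
To make the OOM arithmetic from this chapter concrete, here is a minimal sketch in Python. It assumes the rough rate quoted above (about one OOM per year of combined compute and algorithmic gains) simply holds steady, which is an illustration and not a forecast. The function name `effective_scaleup` is made up for this recap and does not come from the paper.

```python
def effective_scaleup(ooms_per_year: float, years: int) -> float:
    """Total multiplier after compounding `ooms_per_year` orders of magnitude for `years` years."""
    # One OOM is a 10x improvement, so OOMs add up in the exponent.
    return 10 ** (ooms_per_year * years)


# Assumption: ~1 OOM per year, the rough combined rate quoted in the recap.
ASSUMED_OOMS_PER_YEAR = 1.0

for years in range(1, 5):
    multiplier = effective_scaleup(ASSUMED_OOMS_PER_YEAR, years)
    print(f"after {years} year(s): {multiplier:,.0f}x")
```

Changing `ASSUMED_OOMS_PER_YEAR` (say, to 0.5 or 1.5) is a quick way to get a feel for the ‘steep error bars in both directions’.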

Chapter 2: From AGI to SuperIntelligence (The Intelligence Explosion)

- The Intelligence Explosion: Imagine reading one textbook this year on a subject. Next year, you read ten textbooks AND your ability to understand and conceptualize the ideas improves 10x. By year 10, you are effectively reading, writing and comprehending the equivalent of 10 billion textbooks in a single year – with an ability to understand and synthesize information beyond anything a human could ever imagine. This is a very crude way to understand what we might be in for. Right now, half the progress in AI (algorithmic improvements) is being pushed forward by hundreds of human AI researchers – biological meat monkeys. Imagine if we had millions of AI researchers working around the clock – no workplace diversity meetings, onboardings or toilet breaks. This seems possible if we reach the advanced AI remote worker. [7]

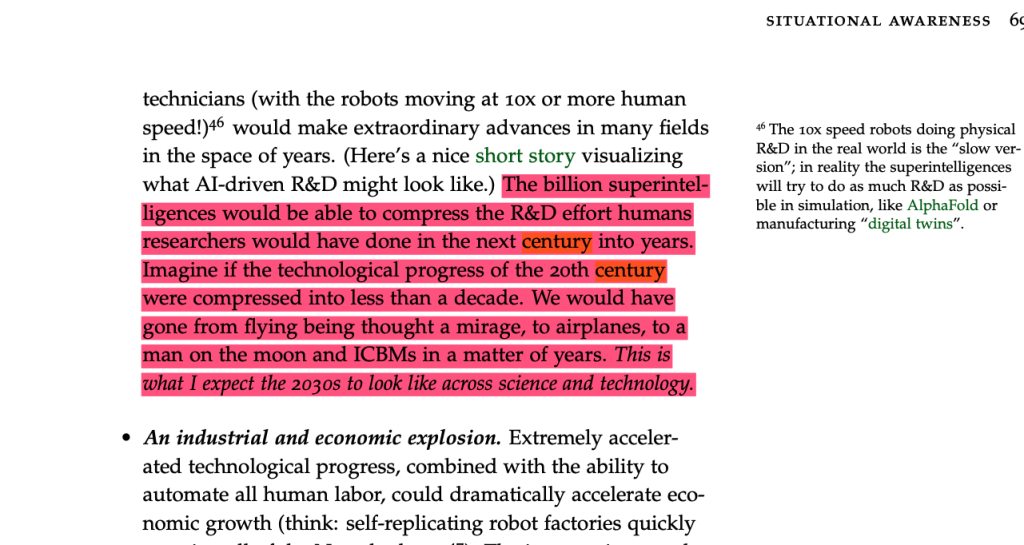

- Expect a 10x Advanced Researcher by 2028: Whether or not you agree with specific timelines, you have to confront the possibility that we’ll have some form of vastly-smarter-than-human intelligence within the decade. Overall, there will be limitations on both compute and algorithmic improvements, but we can’t rule out an intelligence explosion kicking off before 2027. Expect the progress to be slow, expect challenges, but realistically, also expect:

- Automated engineers by 2026/2027

- Automated researchers by 2027/2028

- 10x Advanced Researchers by 2028/2029 [8]

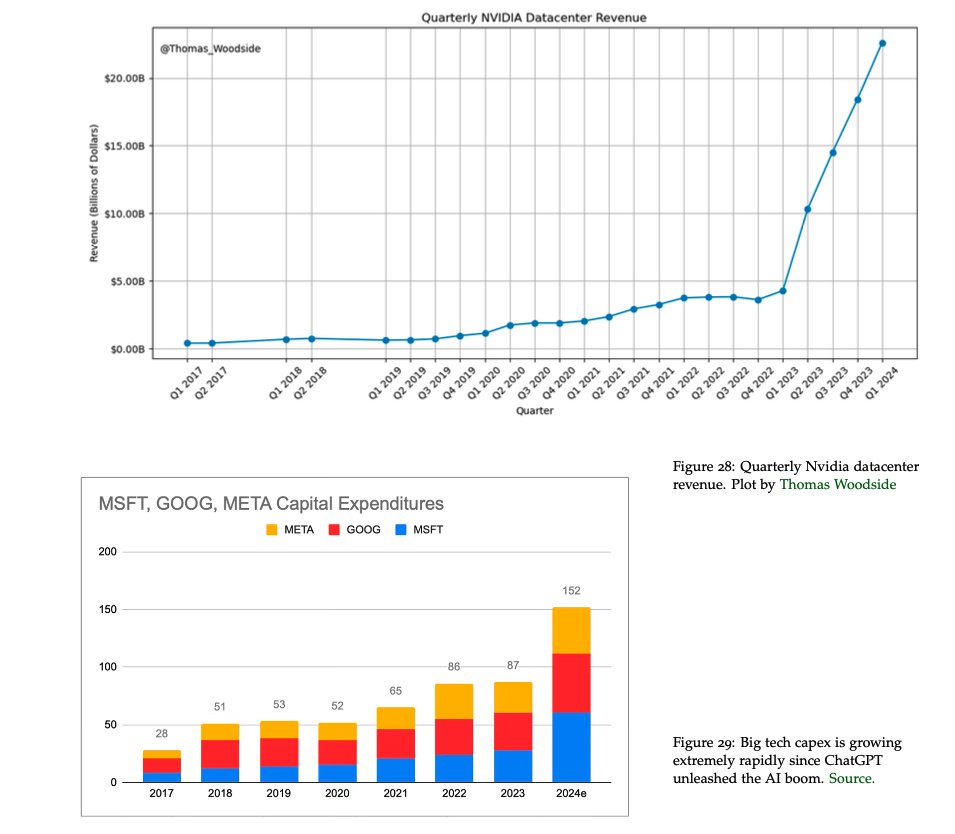

- 100 Years of Progress compressed into less than a Decade: The 10x researcher will rapidly expand R&D in almost every field. Think of 100 years compressed into less than a decade: from not imagining it possible for a human to fly, to landing a man on the moon, to ballistic missiles and drones, all within a few years. [9]

- We’ll solve Robotics: A lot of people think ‘you need robotics’, and robotics is gnarly – but once we have the AI remote worker, we can basically compress a decade’s worth of remote research into less than a year (and this would be the conservative end). We would then, more than likely, be able to solve the gnarly problem of robotics – and then robots are officially flipping your burgers and changing nappies. [10]
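
The textbook analogy at the top of this chapter is just repeated multiplication by ten, and a few lines of Python show how quickly it runs away. This is a rough sketch: the function name `textbooks_per_year` is hypothetical, and it only counts textbooks, ignoring the 10x yearly gain in comprehension. Nine rounds of 10x growth from a single textbook gives one billion per year and ten rounds gives the ten billion mentioned above, so the exact figure depends on where you start counting.

```python
def textbooks_per_year(rounds_of_growth: int, growth_factor: int = 10) -> int:
    """Textbooks read per year after compounding from a single textbook."""
    # Start from 1 textbook and multiply by `growth_factor` once per round.
    return growth_factor ** rounds_of_growth


for rounds in (0, 1, 5, 9, 10):
    count = textbooks_per_year(rounds)
    print(f"{rounds:>2} rounds of 10x growth: {count:,} textbooks per year")
```

Either way you count, the point of the analogy is the shape of the curve, not the exact number.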

Chapter 3: Challenges Along the Way (Trillion Dollar Cluster, Security and Alignment)

Chapter three was split into three sections:

- Racing to the trillion dollar cluster

- Protecting the weights, Lab Secrets and Espionage

- Alignment and Super Alignment

Racing to the Trillion Dollar Cluster:

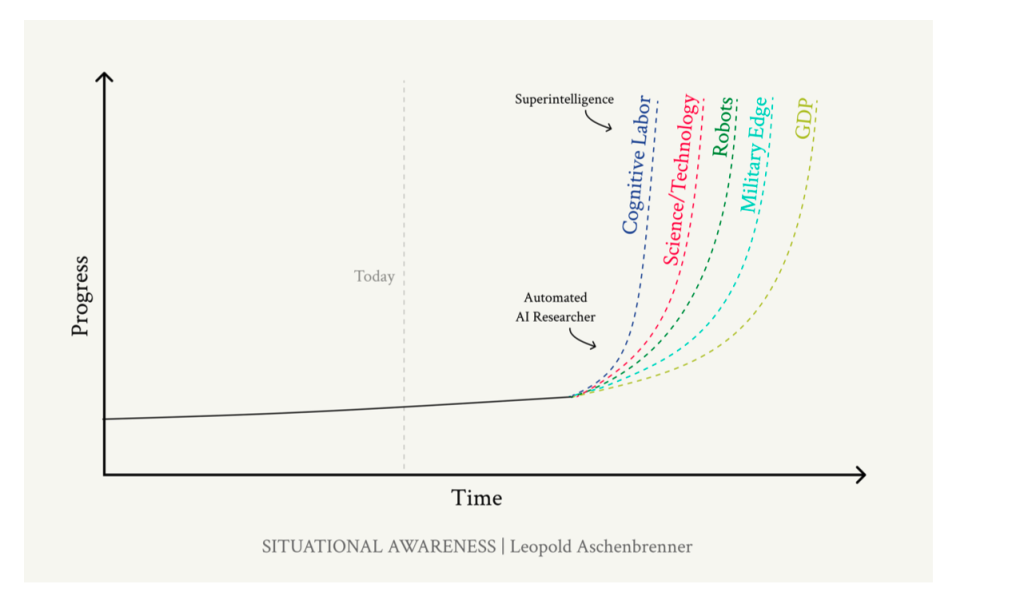

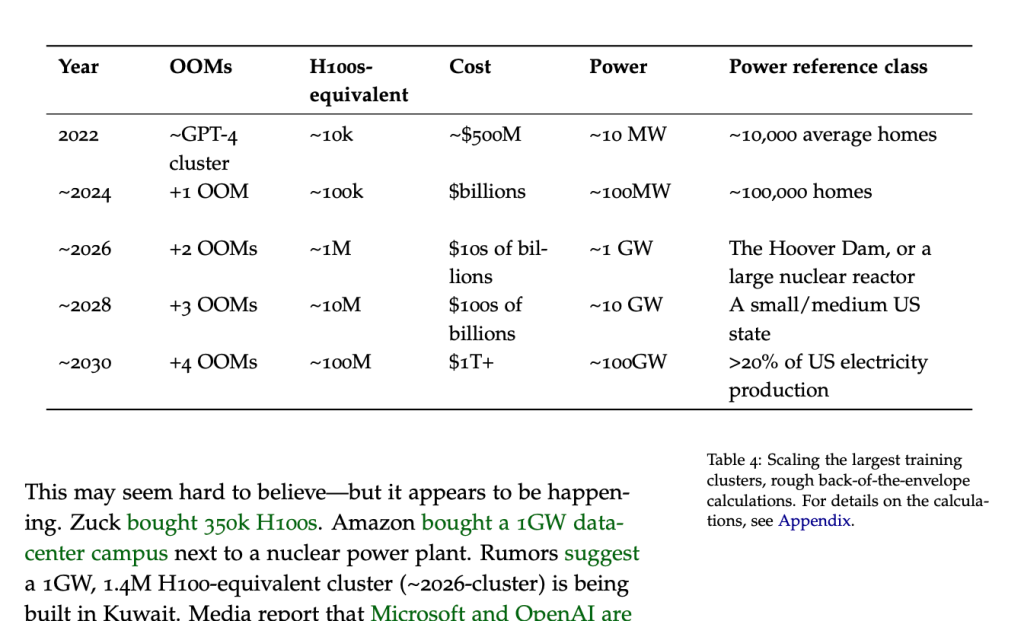

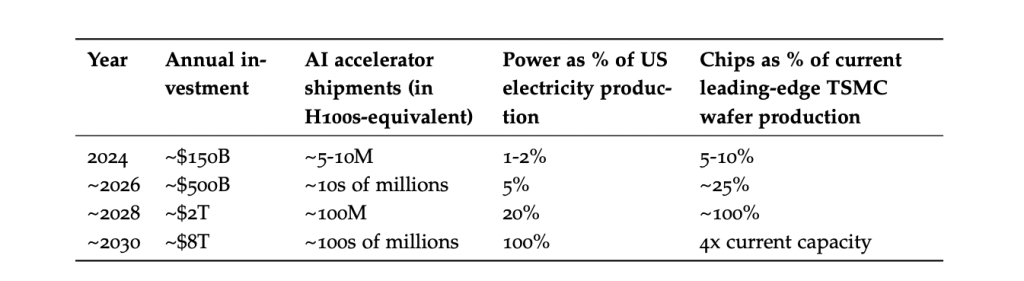

- On track to a Trillion Dollar Investment: The investment (money, energy etc.) required to get us to AGI within the decade is disturbing – the author references a crude ‘trillion dollar cluster’: a trillion dollars’ worth of resources poured into training and launching advanced AI systems into the world. A trillion-farking-dollars! More money than the cost of the International Space Station, the Large Hadron Collider, and every Olympics ever hosted, combined. Perhaps more disturbing than the amount is the fact that the wheels are in motion. The biggest, highest earning companies in the world are now, more or less, fully committed to building AGI; it’s imaginable that we could make this happen within the decade. Earlier in the year, a lot of people thought Sam Altman (OpenAI CEO) was crazy for throwing around a figure of 7 trillion dollars as what is required to advance AI to its full potential. These numbers no longer seem so crazy. [11]

- The revenue will justify the spend: It’s possible we’ll see $100b in revenue from just AI by 2026 – meaning AI will contribute around a third of the revenue of Microsoft, Facebook, Google, OpenAI etc. 2026 seems a tad ambitious, but it actually feels plausible considering how aggressively each of these companies is pursuing and pushing AI. In addition to growing revenue from AI, a remote drop-in worker could send tens of thousands of white collar workers on permanent vacation and free up trillions of dollars of salary expenditure for these companies. A trillion dollars would be roughly 3% of US GDP, and there are plenty of examples throughout history of economies mobilizing much larger chunks of money to direct towards important things. [12]

- Energy targets and chip manufacturing: In a similar way, it also seems plausible we’ll hit the required energy targets (napkin math = about 20% of US electricity production). Energy companies are starting to get excited (“both the demand-side and the supply-side seem like they could support the above trajectory”), and Google, Amazon, and Microsoft are investing in data centers located near abundant energy sources. It will be challenging, though – we’ll have to redesign energy policies and figure out how to meet some large scale energy requirements. Some people raise concerns about chips, but they seem less likely to be a constraint than energy. Having the chip manufacturing onshore will be less important than having the data centers onshore. The implications for companies like NVIDIA and TSMC are huge. [13 + 14]

Security

- Weights and Secrets: Think of the ‘weights’ of current LLMs as a digital cookbook storing all the learned knowledge of the model – billions of recipes developed, refined and ready for upload. If you have the book, you have all the recipes: the ability to easily upload the model and all the work that went into training it. Currently, the weights of models like GPT-4 aren’t that important – i.e., uploading the current cookbook won’t give you the power to create a biological weapon of mass destruction. But at some point, when we get further along the intelligence spectrum, it will. For now, what is important are the ‘secrets’ held in the heads of a small number of AI researchers, supposedly ‘casually’ discussed at San Fran dinner parties. These are secrets which could plausibly jumpstart a huge number of questionably intentioned entities on the path to superintelligence. [15]

- State level espionage: State level espionage is being severely underestimated, and soon there will be immense forces attempting to steal these secrets (and soon the weights) – many already think the labs have been infiltrated by competing state intelligence agencies and the likes of the CCP (Chinese Communist Party). [16]

Alignment and Super Alignment

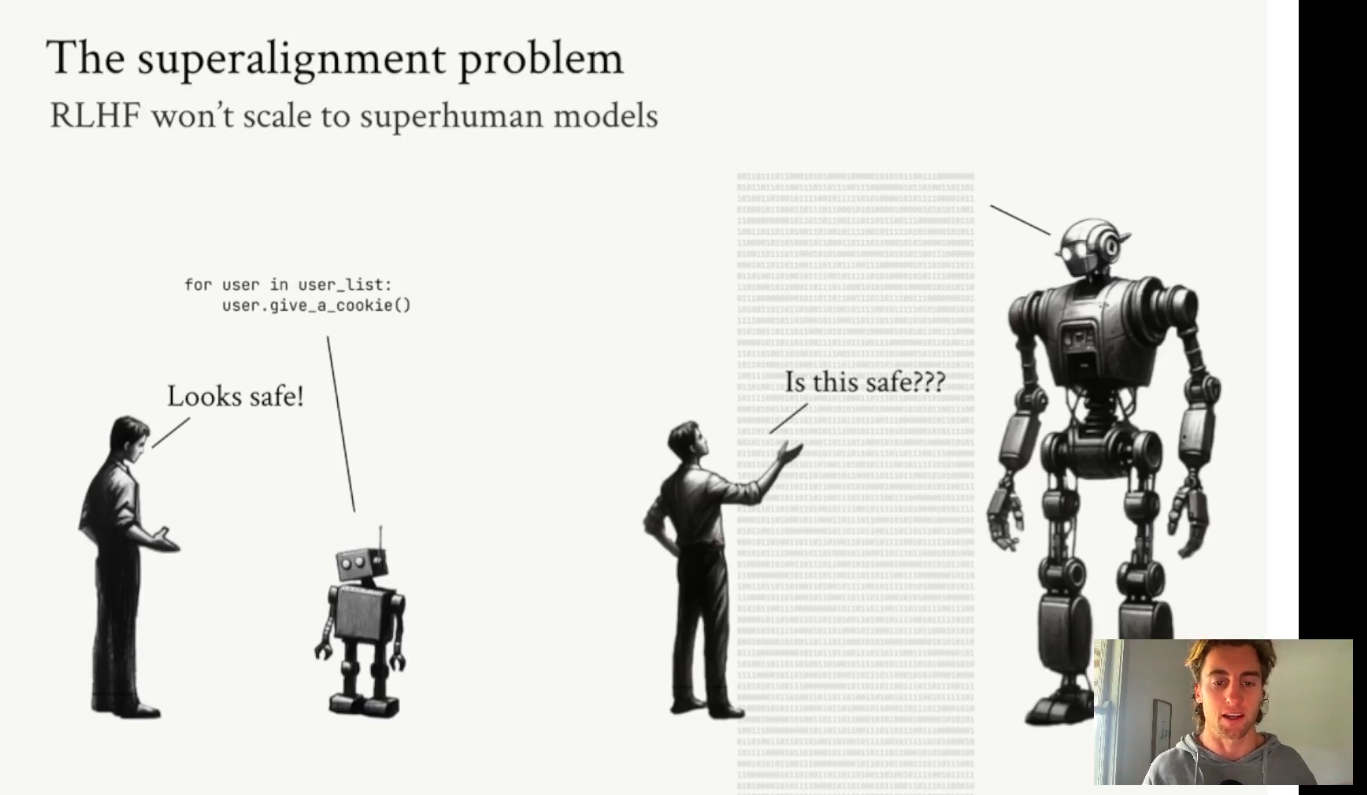

- What is Alignment? Alignment is the idea that we will be able to ‘align’ Artificial Intelligence with human values – ‘guardrails’, if you will, to ensure the development of Artificial Intelligence remains safe, reliable and committed to the best interests of its feeble creators – us. [17]

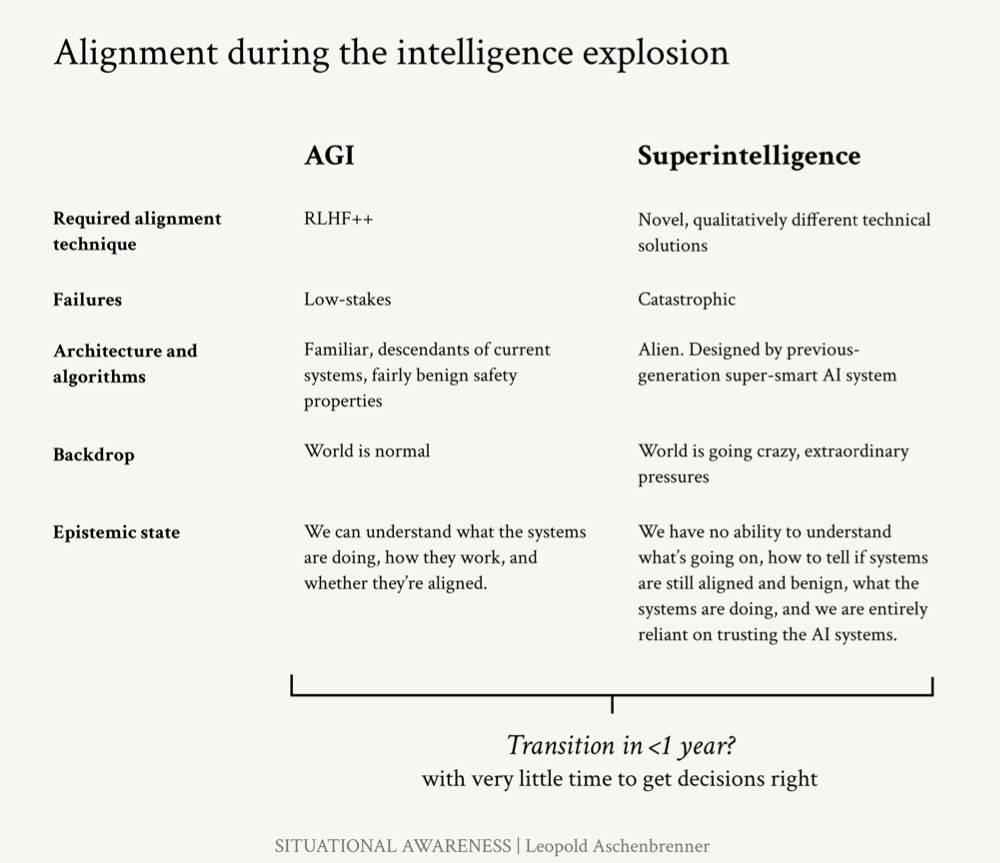

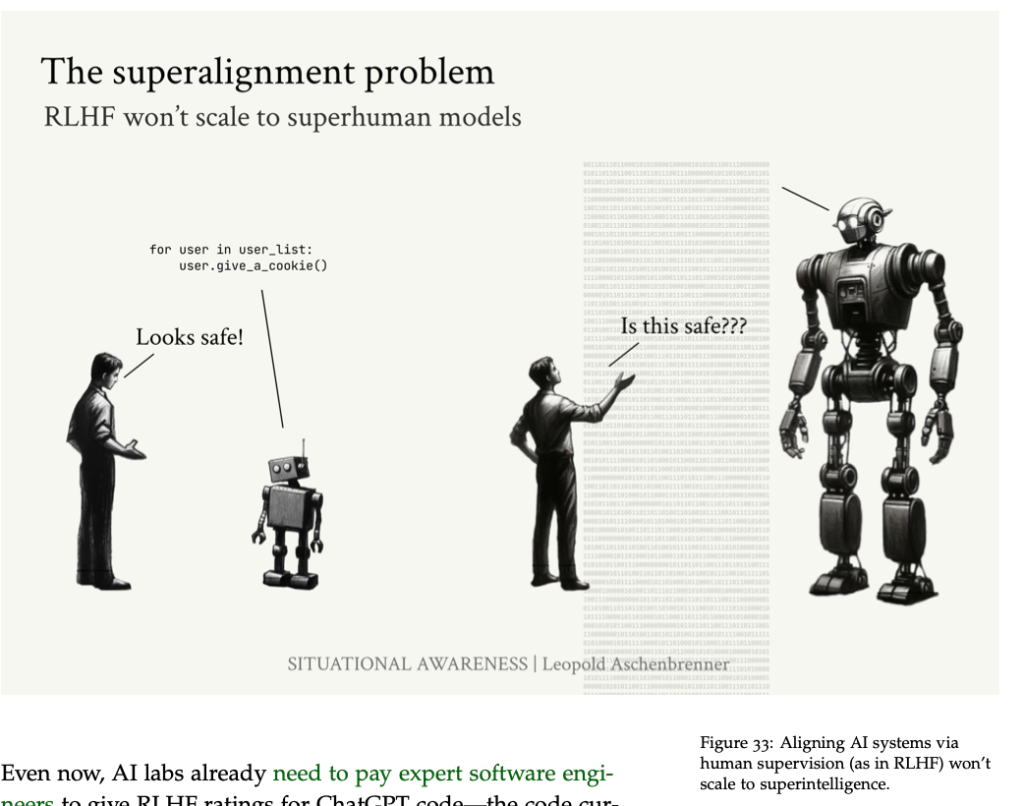

- Alignment is possible, but very hard: The author believes that alignment is ultimately possible, but there are two major caveats – the guardrails will need to evolve as the intelligence progresses, and the rate of change is concerning. Initially, it will be relatively easy to set alignment guardrails. But it’s very likely that within a year or so, we’ll be attempting to align systems far smarter than they are now, at which point it will become increasingly difficult to set appropriate guardrails. [18]

- Interpretability: There’s a bunch of exciting research happening at the moment in the field of ‘interpretability’, which is basically attempting to reverse engineer and understand the processes and patterns responsible for creating outputs. As an example, Anthropic recently dropped a paper titled ‘Mapping the Mind of a Large Language Model’. They were able to find repeatable patterns (or in brain-speak, similar neurons firing) when discussing related concepts: “looking near a feature related to the concept of ‘inner conflict’, we find features related to relationship breakups, conflicting allegiances, logical inconsistencies, as well as the phrase ‘catch-22’.” [19]

- We’ll need new and innovative forms of Interpretability and Alignment (the Superalignment problem): While the author acknowledges the exciting work in this type of interpretability, he reflects that trying to fully reverse engineer increasingly intelligent systems may leave us up shit creek without a paddle – i.e., we will need to employ more innovative forms of interpretability, ultimately relying on the systems themselves to help us understand how they work and ensure alignment. [20]

Chapter 4: The ‘Project’ (The AGI Arms Race)

- The AI Arms Race: Imagine compressing a century’s worth of military technology advancements into a decade. It would be like sending an army of fighter jets, automatic assault rifles and precision guided missiles to meet an opposing force of horseback cavalry with bolt-action rifles. This is the potential military advantage given to whichever team is first able to harness Artificial General Intelligence. Currently, the US and allied forces are ahead in the race to Super Intelligence. [21]

- A matter of National Security: The author shares that while he is not an advocate of heavy government oversight and wishes the technology were used for the betterment of society, it seems almost inevitable that Artificial Intelligence will become a matter of National Security – the push towards AGI will then look less like an open technology startup race and more like a locked down relationship between Lockheed Martin, the Military, the Government and a select one or two highly regulated AI labs pushing the development of new and highly effective WMDs (Weapons of Mass Destruction). [22]

- Open Source is a FairyTale: ‘Open Source’ development of Artificial Intelligence might play a lesser role in developing lagging variations, use cases and models – but in terms of cutting edge development and the main race to AGI, it’s a fairytale. [23]

- The Manhattan Project: The whole situation is eerily reminiscent of the Manhattan Project – the massive, secretive, and resource-intensive effort to develop nuclear weapons during World War II. [24]

Final notes – what if they’re right?

- The 2030s will be insane: Before the end of the decade, we’ll have super intelligence, and the 2030s (if we make it) will be fucking wild. [25]

- The AI Realist Camp: There’s a heated debate within AI at the moment that tends to split the audience into one of two camps. At one (extreme) end are those who believe AI should be stopped at all costs, as it will result in the inevitable extinction of the human species. At the other (extreme) end are those who believe we should be accelerating at all costs toward the creation of a super-intelligent AGI, at which point we will seamlessly merge with machines and project our consciousness out into the vast and unexplored cosmos. The author reflects that much of the argument on either side of the debate is either obsolete or harmful. He proposes a new camp, ‘AI Realists’, who believe (a) superintelligence is a matter of national security, (b) America must lead the race, and (c) we better not screw it up. [26]

- Situational Awareness: What has been laid out above is ‘the single most likely scenario’. At this point, you might think the author is crazy, but these ideas, which initially started as abstract jokes, are starting to feel very real to those in Silicon Valley who are actually creating the thing. [27]